Calculating Required Resources (Section Still Under Construction)

The following issues may seem simple, but they are very important for the researcher to master. Once the researcher starts being assigned projects, he must quickly assess, within a short period of time, whether the assigned project is feasible on the available resources. There are several issues that the researcher needs to understand:

1- Number of available cores.

2- Data Storage Capacity.

3- Graphics card limitations.

4- Time limitations.

5- Simulation detail.

Some people will ask what a time step means and why the time step is not always taken as 1.

Note that the points discussed below give linear estimates of the required resources, whereas in practice the calculated values tend to follow something closer to a second-order curve.

Simulation Types According to Size

They can be characterized in the following manner.

1- Small Sized Simulation.

This is done on a 2 to 4 core machine.

2- Medium Sized Simulation.

This is done on a 4 to 7 core machine.

3- Large Sized Simulation.

This is done on a 10 to 20 core cluster.

4- Super Large Simulation.

This is done on a 50 to 100 core cluster.

5- Super Super Large Simulation.

This is done on a 100 to 300 core cluster.

Useful

http://insidehpc.com/hpc101/intro-to-hpc-whats-a-cluster/

http://verde.esalq.usp.br/~jorge/cursos/ramiro/Ox_packages/OxMPI/grid.pdf

http://statistics.berkeley.edu/computing/servers/cluster

http://manuals.bioinformatics.ucr.edu/home/hpc

Storage Resources

During research you will find it very difficult to convey the restrictions you face to other researchers. These simulations take a lot of time, computing resources and storage, so you need to make an assessment calculation to show your boss whether the problem is feasible and how realistic the targets are. The steps are as follows:

1- Conduct a calculation for one time step.

2- Check, in the save folder, the size of the data file generated for that one calculated time step (one second of simulated time in the formula that follows).

The required formula

Total_Generated_Data_for_the_Whole_Required_Simulation=Number_of_Seconds*Generated_Data_for_One_Second

Example

A computer has a storage capacity of 2 terabytes, distributed over two hard drives of 1 terabyte each. Knowing that a 50-second simulation generates 125 gigabytes of data, how much data will a 360-second simulation generate?

Solution

Data_Generated_for_360_Sec=Simulation_for_360_Sec*(Data_Generated_for_50_Sec/Simulation_for_50_Sec)

Data_Generated_for_360_Sec=360*(125/50)

Data_Generated_for_360_Sec=900 gigabytes

This means that one of the 1 terabyte hard drives needs to be almost completely free of stored data for the calculation. This shows the importance of planning ahead, so that a long calculation does not stop abruptly when the drive fills up.
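The storage estimate above can be sketched in a few lines of Python. This is only a minimal illustration of the linear scaling used in the example; the function names are hypothetical, and 1 terabyte is taken as 1000 gigabytes for the comparison.

```python
def estimate_storage_gb(target_seconds, sample_seconds, sample_data_gb):
    """Linearly scale the data written by a short test run to the full run."""
    return target_seconds * sample_data_gb / sample_seconds


def fits_on_drive(required_gb, free_gb):
    """Return True if the estimated data fits in the free space of one drive."""
    return required_gb <= free_gb


# Values from the example: 125 GB for 50 seconds, target of 360 seconds.
required = estimate_storage_gb(360, 50, 125)
print(required)                       # 900.0
# Assumption: 1 TB drive treated as 1000 GB, completely empty.
print(fits_on_drive(required, 1000))  # True
```

Because the estimate is linear, it is better read as a lower bound; leaving some margin on the drive is a sensible precaution.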

Available Number of Cores

The number of available calculation cores plays a major role in getting through the project on time. This applies to parallel codes; not all codes can be run in parallel mode.

As a linear estimate, assuming the code scales ideally across the cores:

Total_Required_Time_for_Simulation=One_Core_Calculation_Time/Number_of_used_Cores
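A rough sketch of how the core count affects run time is given here. Under ideal linear scaling the wall-clock time is the single-core time divided by the number of cores. The second function uses Amdahl's law, which is not part of the original text and is added only to illustrate that a code which is partly serial gains less from extra cores. All names and the 70-hour figure are hypothetical.

```python
def ideal_parallel_time(one_core_time, cores):
    """Wall-clock time if the work splits perfectly across all cores."""
    return one_core_time / cores


def amdahl_time(one_core_time, cores, parallel_fraction):
    """Wall-clock time when only part of the code runs in parallel (Amdahl's law)."""
    serial_part = one_core_time * (1.0 - parallel_fraction)
    parallel_part = one_core_time * parallel_fraction / cores
    return serial_part + parallel_part


# Hypothetical case: a job that needs 70 hours on one core, run on 7 cores.
print(ideal_parallel_time(70.0, 7))         # 10.0
print(round(amdahl_time(70.0, 7, 0.9), 2))  # 16.0, with 90% of the code parallel
```

The single-core time and the parallel fraction have to be measured for the actual code; they are not known in advance.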

Example

For a 7 core machine

Solution

The solution will follow the following steps..............

Time Required for the Whole Calculation

For a case with a static (fixed) time step the problem is straightforward and can be solved as shown in the following example.

Example

The calculation of 50 time steps requires 4 hours and 4 minutes (14640 seconds) on a 6 core machine. What would be the required time for a 360 time step simulation?

Solution

Calculation_Time_360_Time_Steps=Calculation_Time_50_Time_Steps*(Time_Steps_360/Time_Steps_50)

Calculation_Time_360_Time_Steps=(14640 seconds)*(360/50)

Calculation_Time_360_Time_Steps=105408 seconds=29.28 hours
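The same calculation can be written as a short Python sketch. It assumes a fixed time step and the same machine for the test run and the full run; the function name is hypothetical.

```python
def estimate_run_time_seconds(target_steps, sample_steps, sample_time_seconds):
    """Linearly scale the measured time of a short run to the full number of steps."""
    return sample_time_seconds * target_steps / sample_steps


# Values from the example: 50 time steps took 4 hours and 4 minutes.
sample_time = 4 * 3600 + 4 * 60          # 14640 seconds
total_seconds = estimate_run_time_seconds(360, 50, sample_time)
print(total_seconds)                     # 105408.0
print(round(total_seconds / 3600, 2))    # 29.28 hours
```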

Shortcuts to Shorten the Time of Calculation

The following steps can be of great help.

1- Manually reducing the number of iteration loops for each time step.

2- Assigning a function that reduces the number of iterations for the simulation as the time steps progress.
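One possible form of such a function is sketched here in Python. It is only an illustration: the linear decrease, the function name and the numbers 100 and 20 are assumptions, not something prescribed by a particular solver.

```python
def iterations_for_step(step, total_steps, start_iterations, min_iterations):
    """Reduce the iteration limit linearly from start_iterations to min_iterations.

    step is counted from 1 to total_steps (assumed numbering).
    """
    if total_steps <= 1:
        return start_iterations
    fraction = (step - 1) / (total_steps - 1)
    value = start_iterations - fraction * (start_iterations - min_iterations)
    return max(min_iterations, round(value))


# Hypothetical settings: 100 iterations at the first step, 20 at the last.
print(iterations_for_step(1, 360, 100, 20))    # 100
print(iterations_for_step(360, 360, 100, 20))  # 20
```

Whether the solution still converges with fewer iterations per time step has to be checked for each case.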

Example

Will be written shortly.

Solution

The solution will follow the following steps..............

Shortcuts to Reduce the Required Storage Capacity

You have the option to save at every time step, but for a meshed domain with one million cells this requires a large storage capacity, about 250 gigabytes for each time step of the simulation. One way around this problem: if you have a 100-second simulation, you can save only every 10 seconds, which cuts the required storage space by about 90%.

Example

Knowing that a simulation of 50 time steps generates 125 gigabytes of data, how much data will be generated by a 360 time step simulation that saves every 10 time steps?

Solution

The researcher needs to keep in mind that the software by default saves the first and last time steps of any simulation.
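A possible way to carry out this calculation is sketched here in Python. It rests on several assumptions that the example does not state: the 125 gigabytes come from 50 time steps that were all saved (2.5 gigabytes per saved step), the time steps are numbered from 1, and the software saves every step that is a multiple of the interval plus the first and last steps. The function names are hypothetical.

```python
def saved_steps(total_steps, save_interval):
    """List the time steps written to disk: every save_interval-th step,
    plus the first and the last step (assumed to be saved by default)."""
    steps = set(range(save_interval, total_steps + 1, save_interval))
    steps.add(1)
    steps.add(total_steps)
    return sorted(steps)


def storage_with_interval_gb(total_steps, save_interval, data_per_saved_step_gb):
    """Total data written when only the selected time steps are saved."""
    return len(saved_steps(total_steps, save_interval)) * data_per_saved_step_gb


data_per_step = 125 / 50                                 # 2.5 GB per saved step
print(len(saved_steps(360, 10)))                         # 37
print(storage_with_interval_gb(360, 10, data_per_step))  # 92.5
```

Under these assumptions the run writes 37 files, or about 92.5 gigabytes, compared with 900 gigabytes when every one of the 360 time steps is saved.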

Required Graphics Card

Knowing which graphics card your problem needs is another challenge, because at some point you will need to tell your boss the limitations of the project or he will keep asking you for more. The problem appears when you start loading the time steps into the analysis software: to get a concentration plot for 360 seconds you might need to load 5 terabytes of data onto the graphics card, which is impossible and causes the computer to crash.

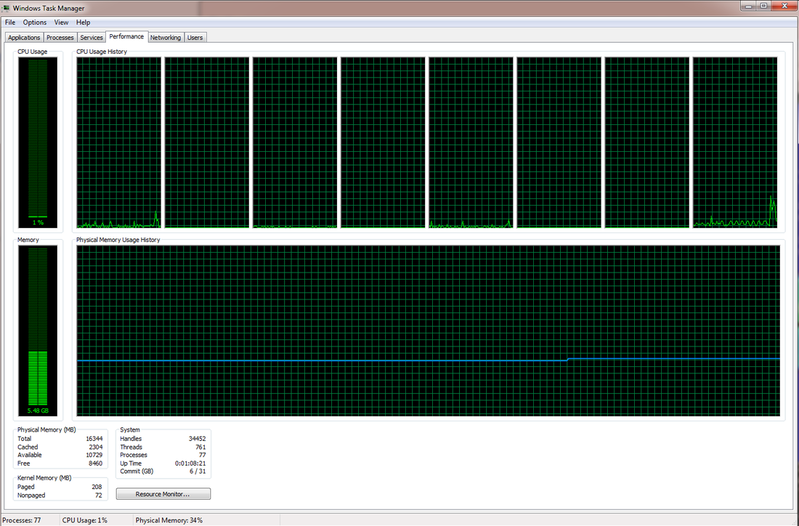

The question that comes to mind is how the researcher can find out how much RAM is in use and, from that, how much is available. This is done by pressing Ctrl+Alt+Delete to open the Task Manager. Do this before you load your simulation data and note how much RAM is in use, then check again after loading.

Problem

How much generated simulation data can a computer with 16 gigabytes of RAM handle? This is for a Windows machine (the details for Linux machines will be added). The first example case is for an 8-node mesh.

Solution

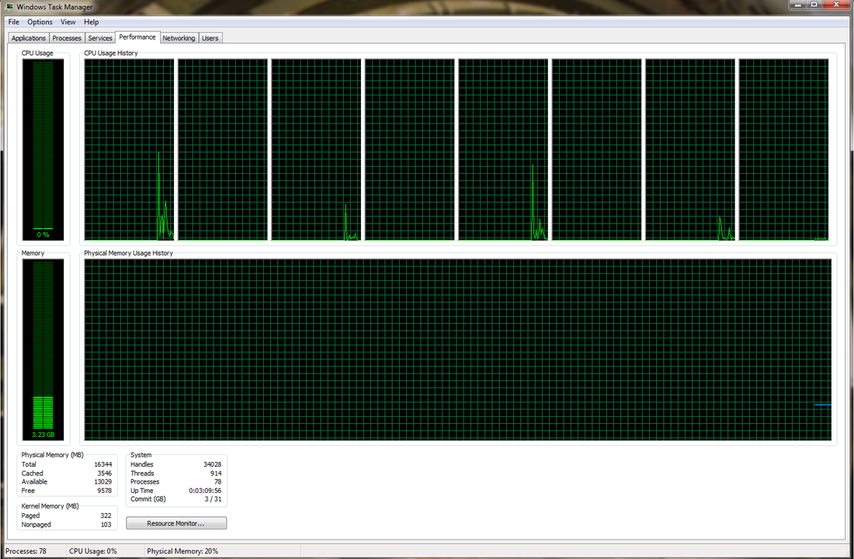

The following picture shows before loading the data:

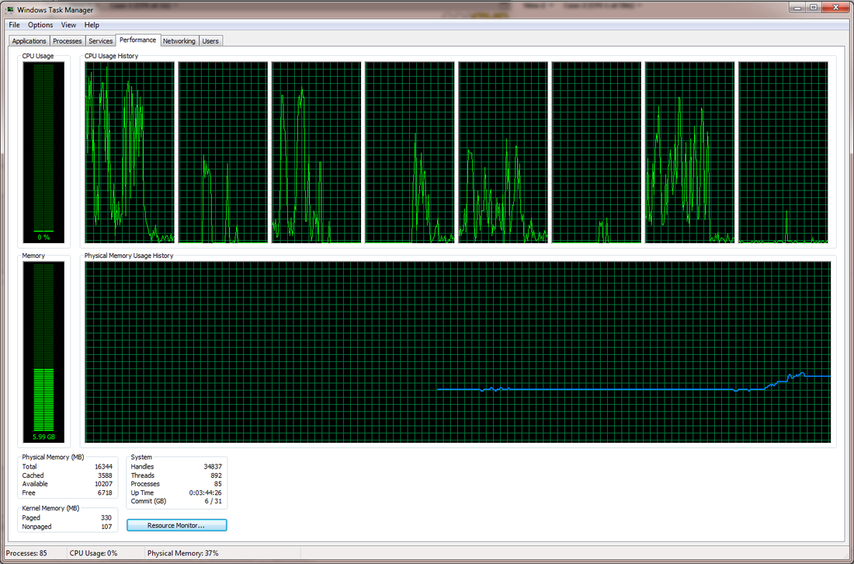

The following picture shows after the data is loaded:

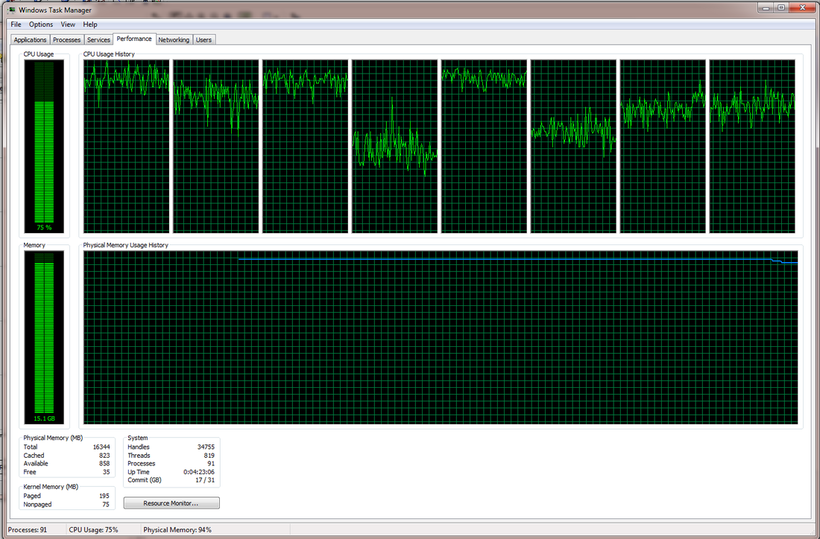

The following picture shows a calculation running on six cores; it is visible that all cores are taking part in the simulation. Notice that nearly 15.1 GB of RAM is used out of 16 GB, which shows that the simulation is on the verge of crashing.

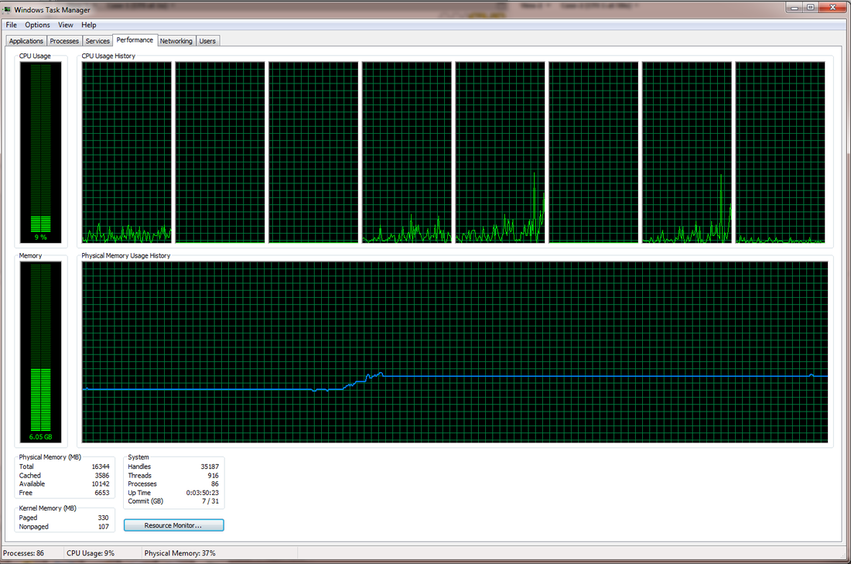

The following graph shows the state after the calculation has been conducted. The generated data file is 10 GB and nearly 6 GB of RAM is used, which means that about 60% of the data has been loaded (steady case; case studied: inner dynamic, outer static).
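The RAM figures quoted above can be checked with a small Python sketch. It only repeats the arithmetic in the text, reading the ratio of RAM used to data file size as the fraction of data loaded; the function names are hypothetical, and the values are the ones read from the Task Manager.

```python
def fraction_loaded(ram_used_gb, data_file_gb):
    """Ratio of RAM taken by the loaded data to the size of the data file."""
    return ram_used_gb / data_file_gb


def ram_headroom_gb(total_ram_gb, ram_in_use_gb):
    """RAM still free before the machine runs out of memory."""
    return total_ram_gb - ram_in_use_gb


# After the calculation: a 10 GB data file with about 6 GB of RAM in use.
print(fraction_loaded(6, 10))                # 0.6
# During the six core run: 15.1 GB in use out of 16 GB.
print(round(ram_headroom_gb(16, 15.1), 1))   # 0.9
```

A headroom of less than 1 GB is what the text describes as being on the verge of a crash.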

Useful Youtube video relating to the resources issue

Unless otherwise noted, all content on this site is @Copyright by Ahmed Al Makky 2012-2013 - http://cfd2012.com